Samsung Labs has a new AI face-swapping tool called MegaPortraits that has gone viral abroad. In short, the tool captures the facial movements of a real person so that a static portrait can imitate that person's expressions and actions and “move”.

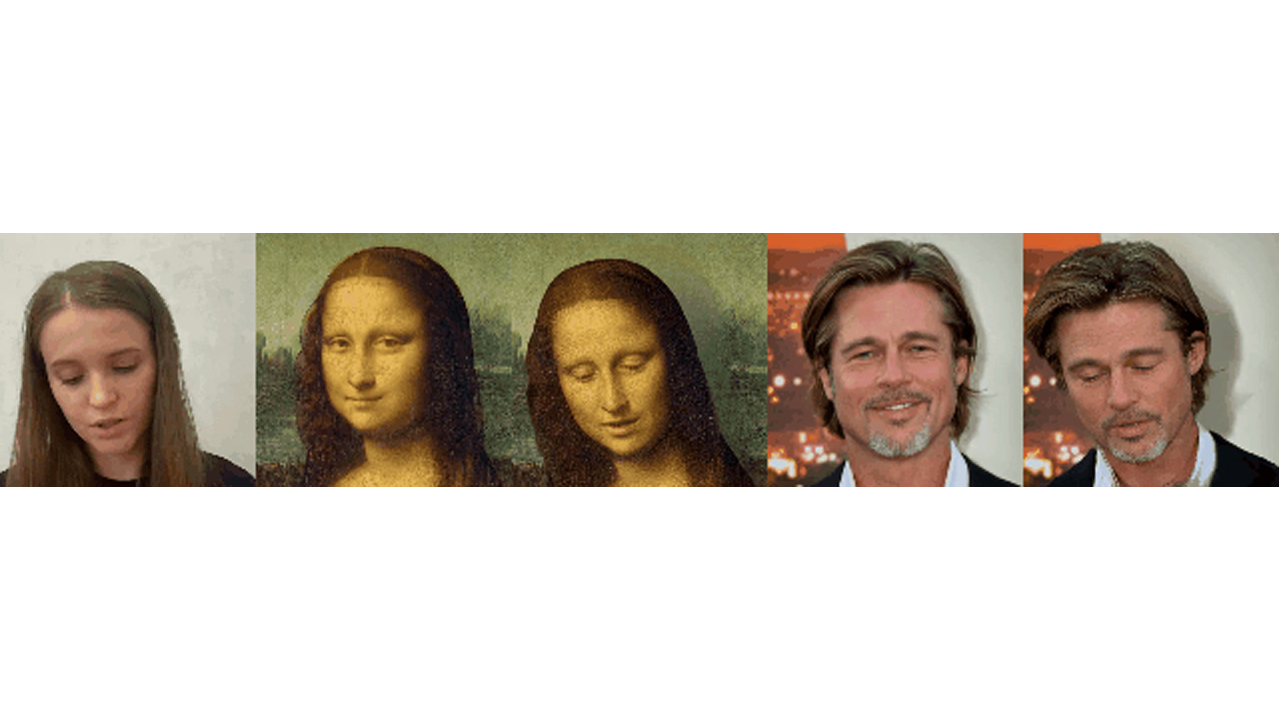

As the GIF shows, a video can set an originally static picture in motion, even revealing the outline of the side of the face. MegaPortraits “imagines” these unseen views on its own. Even subtle expressions are “breathed into” the person in the picture as if it had been a video all along, with no visible flaws.


The technique combines the appearance of the source image with the motion of the “driving image”: the head pose and facial expressions of the driving footage are transferred onto the source image. The AI model produces each output frame from the source image and the corresponding frame of the “driving image”, and it is trained on pairs of random video frames. In the GIFs above, the “driving image” is the real person on the far left of each set.
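
To make the appearance/motion split concrete, here is a deliberately toy Python sketch. It is not Samsung's implementation: `Frame`, `encode_appearance`, `encode_motion` and `render` are hypothetical stand-ins for learned neural networks, and a “frame” is reduced to a few labelled fields. It only illustrates the data flow, with appearance taken once from the source portrait and pose and expression taken from each frame of the driving video.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical toy representation: a real model works on pixels and learned
# features, not on hand-labelled fields like these.
@dataclass(frozen=True)
class Frame:
    identity: str     # appearance: who is in the picture
    yaw: float        # motion: head rotation left/right, in degrees
    pitch: float      # motion: head rotation up/down, in degrees
    expression: str   # motion: facial expression label

Motion = Tuple[float, float, str]

def encode_appearance(frame: Frame) -> str:
    """Stand-in appearance encoder: keeps only what the person looks like."""
    return frame.identity

def encode_motion(frame: Frame) -> Motion:
    """Stand-in motion encoder: keeps only head pose and expression."""
    return (frame.yaw, frame.pitch, frame.expression)

def render(appearance: str, motion: Motion) -> Frame:
    """Stand-in generator: draws the given appearance in the given pose."""
    yaw, pitch, expression = motion
    return Frame(appearance, yaw, pitch, expression)

def animate(source: Frame, driving_video: List[Frame]) -> List[Frame]:
    """Produce one output frame per driving frame."""
    appearance = encode_appearance(source)  # extracted once from the still image
    return [render(appearance, encode_motion(d)) for d in driving_video]

portrait = Frame("portrait", 0.0, 0.0, "neutral")
driver = [Frame("actor", 30.0, -5.0, "smile"), Frame("actor", -15.0, 0.0, "surprise")]
for frame in animate(portrait, driver):
    print(frame)
```

Every output frame keeps the portrait's identity but takes the actor's head pose and expression, which is the behaviour the GIFs demonstrate.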

The model processes appearance and motion separately before projecting the motion of the “driving image” onto the source image. In this work, Samsung took its neural head avatar technology to megapixel resolution. The high-resolution avatars are designed to animate a picture no matter how little the real person resembles the person in it: unlike some other deepfake software, the driving person does not need a similar face shape or skin tone.

Even when the person in the “driving image” looks very different from the person in the source image, combining medium-resolution video data with high-resolution image data lets the model reach the desired rendering quality while still reproducing a wide range of motion.

The MegaPortraits researchers explain: “Our training setup is relatively standard. We sample two frames from our dataset at each step: a source frame and a driving frame. Our model imposes the motion of the driving frame (i.e. head pose and facial expression) on the appearance of the source frame to produce the output image.”
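
The sampling step in the quote can be sketched as follows. This is an illustrative assumption, not code from the MegaPortraits authors: the dataset is modelled as a hypothetical dictionary mapping a video ID to a list of frame file names, and the hypothetical `sample_training_pair` draws both frames from the same video, matching the same-video setting the researchers describe in the next quote.

```python
import random
from typing import Dict, List, Tuple

def sample_training_pair(
    dataset: Dict[str, List[str]], rng: random.Random
) -> Tuple[str, str]:
    """Return (source_frame, driving_frame) drawn from one random video."""
    # Only videos with at least two frames can supply two distinct frames.
    eligible = sorted(vid for vid, frames in dataset.items() if len(frames) >= 2)
    if not eligible:
        raise ValueError("need at least one video with two or more frames")
    video_id = rng.choice(eligible)
    source, driving = rng.sample(dataset[video_id], 2)  # two different frames
    return source, driving

videos = {
    "video_a": ["a_000", "a_001", "a_002"],
    "video_b": ["b_000", "b_001"],
}
rng = random.Random(42)  # seeded so the sequence of pairs is reproducible
for step in range(3):
    print(step, sample_training_pair(videos, rng))
```

Passing an explicit `random.Random` instance keeps the sampler deterministic under a fixed seed, which is convenient when debugging a training loop.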

They also stated: “The main learning signal is obtained from the training set where the source and driving frames are from the same video, so our model predictions are trained to match the driving frames.”
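
Same-video pairs provide a learning signal because the driving frame doubles as the target: if the model correctly moves the source appearance into the driving pose, its output should look like the driving frame. The sketch below assumes a plain L1 (mean absolute error) pixel loss purely for simplicity; the losses MegaPortraits actually uses are not described in this article, and `Image`, `Model`, `l1_loss` and `same_video_loss` are hypothetical names.

```python
from typing import Callable, List, Sequence

Image = List[float]  # hypothetical: a flattened grayscale image, values in [0, 1]
Model = Callable[[Image, Image], Image]

def l1_loss(prediction: Sequence[float], target: Sequence[float]) -> float:
    """Mean absolute per-pixel difference between two equally sized images."""
    if len(prediction) != len(target) or not target:
        raise ValueError("images must be non-empty and the same size")
    return sum(abs(p - t) for p, t in zip(prediction, target)) / len(target)

def same_video_loss(model: Model, source: Image, driving: Image) -> float:
    """Source and driving frames come from the same video, so the driving
    frame itself serves as the ground truth for the model's output."""
    prediction = model(source, driving)
    return l1_loss(prediction, driving)

def copy_source(src: Image, drv: Image) -> Image:
    """A useless 'model' that ignores the driving motion entirely."""
    return list(src)

def perfect(src: Image, drv: Image) -> Image:
    """An ideal 'model' that reproduces the driving frame exactly."""
    return list(drv)

source = [0.0, 0.5, 1.0]
driving = [0.0, 1.0, 0.0]
print(same_video_loss(copy_source, source, driving))  # prints 0.5
print(same_video_loss(perfect, source, driving))      # prints 0.0
```

A model that ignores the motion is penalised, while one that reproduces the driving frame reaches zero loss, which is exactly why predictions are trained to match the driving frames.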

The MegaPortraits researchers also acknowledged that the current version of the tool does not perform well on the shoulder and clothing areas, and they plan to focus on improving this in future work.